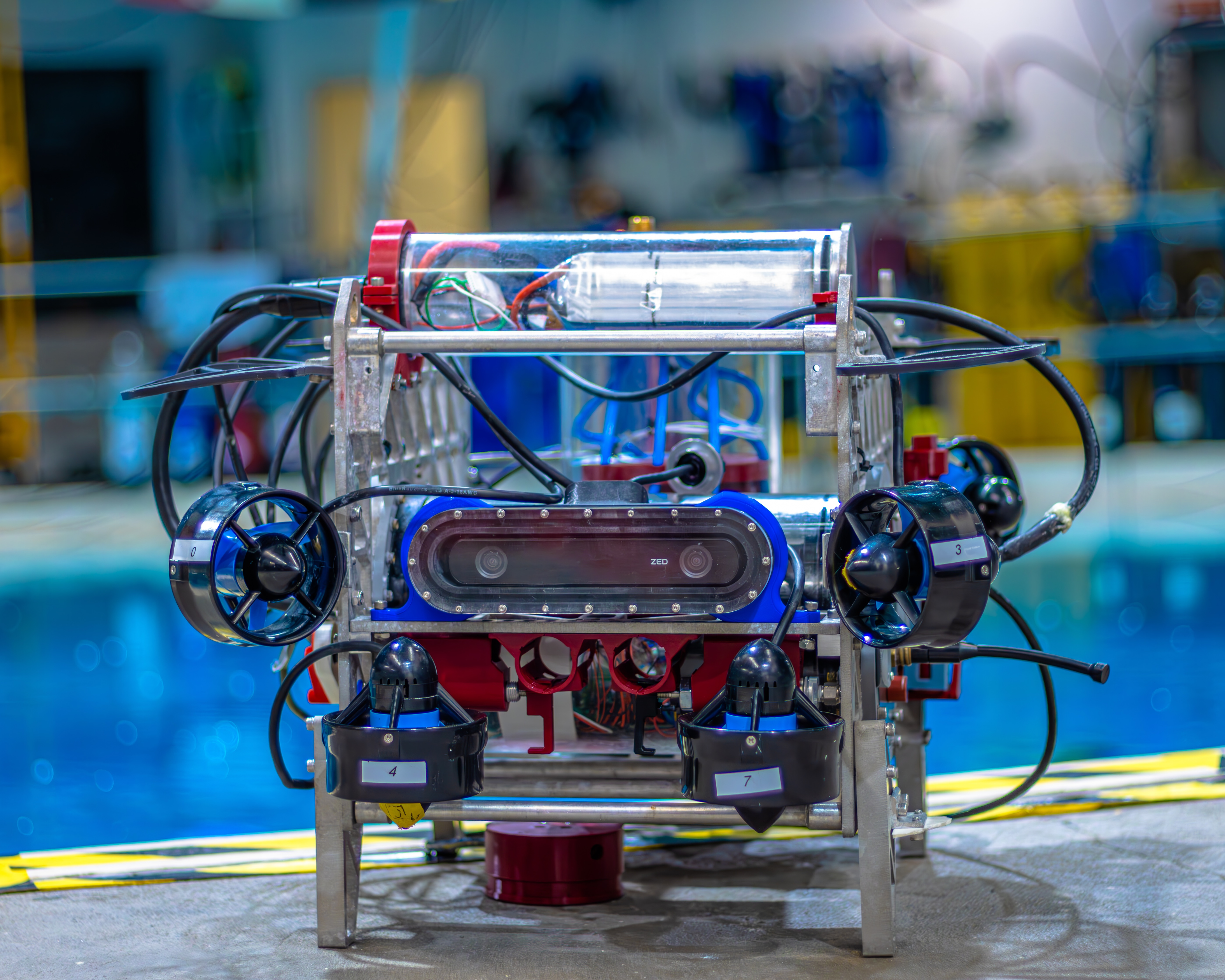

Meet Qubo!

Qubo: University of Maryland's competition AUV

Qubo: University of Maryland's competition AUV

Qubo is Robotics @ Maryland's flagship autonomous underwater vehicle, purpose-built for the demands of international RoboSub competition. Representing years of iterative design and engineering across mechanical, electrical, and software disciplines, Qubo embodies the team's commitment to pushing the boundaries of underwater autonomy.

Competition Overview

RoboSub is an international autonomous underwater vehicle competition where teams design, build, and program robots to complete underwater tasks without human intervention. As a member of Robotics @ Maryland from 2022-2024, I served as Perception Lead on the software team, developing and overseeing the computer vision pipeline that enables our UAV, Qubo, to detect, classify, and localize competition elements.

In the 2024 competition held in San Diego, California, our team placed 11th out of 40+ teams from around the world. This is a significant achievement showcasing the effectiveness of our integrated perception, navigation, and control systems.

Qubo operating in the competition pool during RoboSub 2024