Project Collection Overview

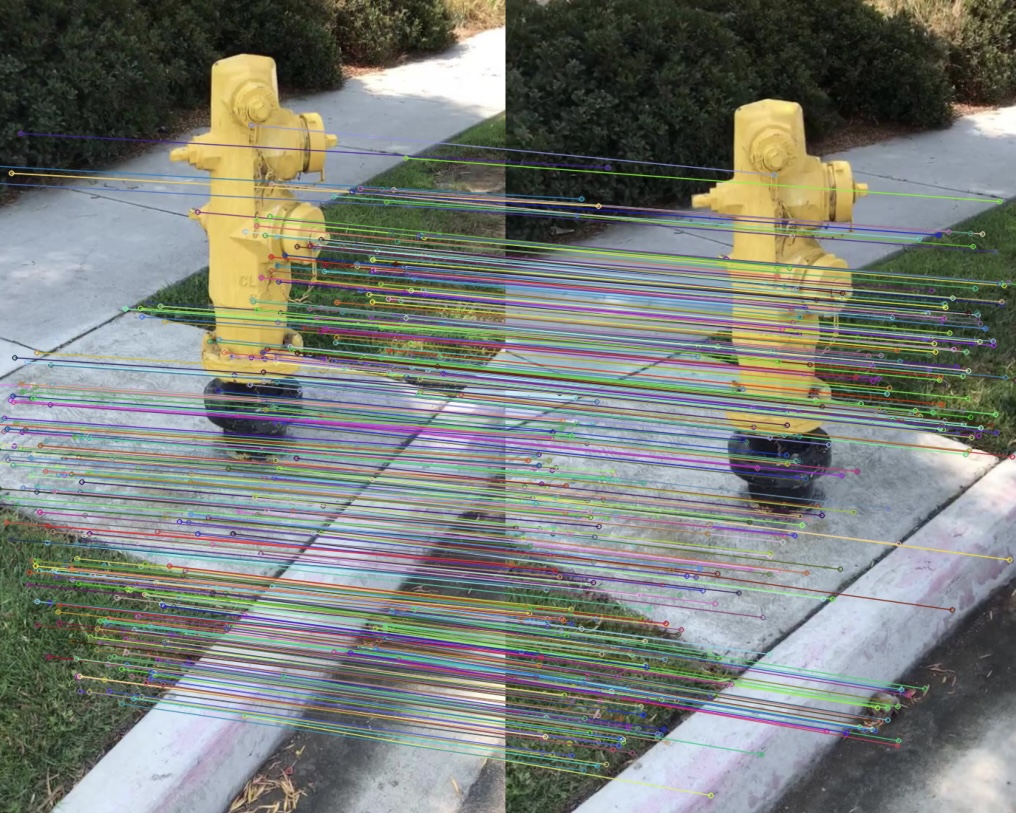

This portfolio showcases four advanced computer vision projects I completed, demonstrating proficiency across the fundamental pillars of computer vision: segmentation, image stitching, 3D reconstruction, and depth estimation. Each project tackles a distinct challenge in perception, from detecting objects in images to reconstructing 3D scenes from 2D photographs.

These projects demonstrate both theoretical understanding (implementing algorithms from research papers) and practical engineering (handling real-world data, edge cases, and performance optimization).

Overview of the four computer vision domains explored

Overview of the four computer vision domains explored